RobiButler: Remote Multimodal Interactions with Household Robot Assistant

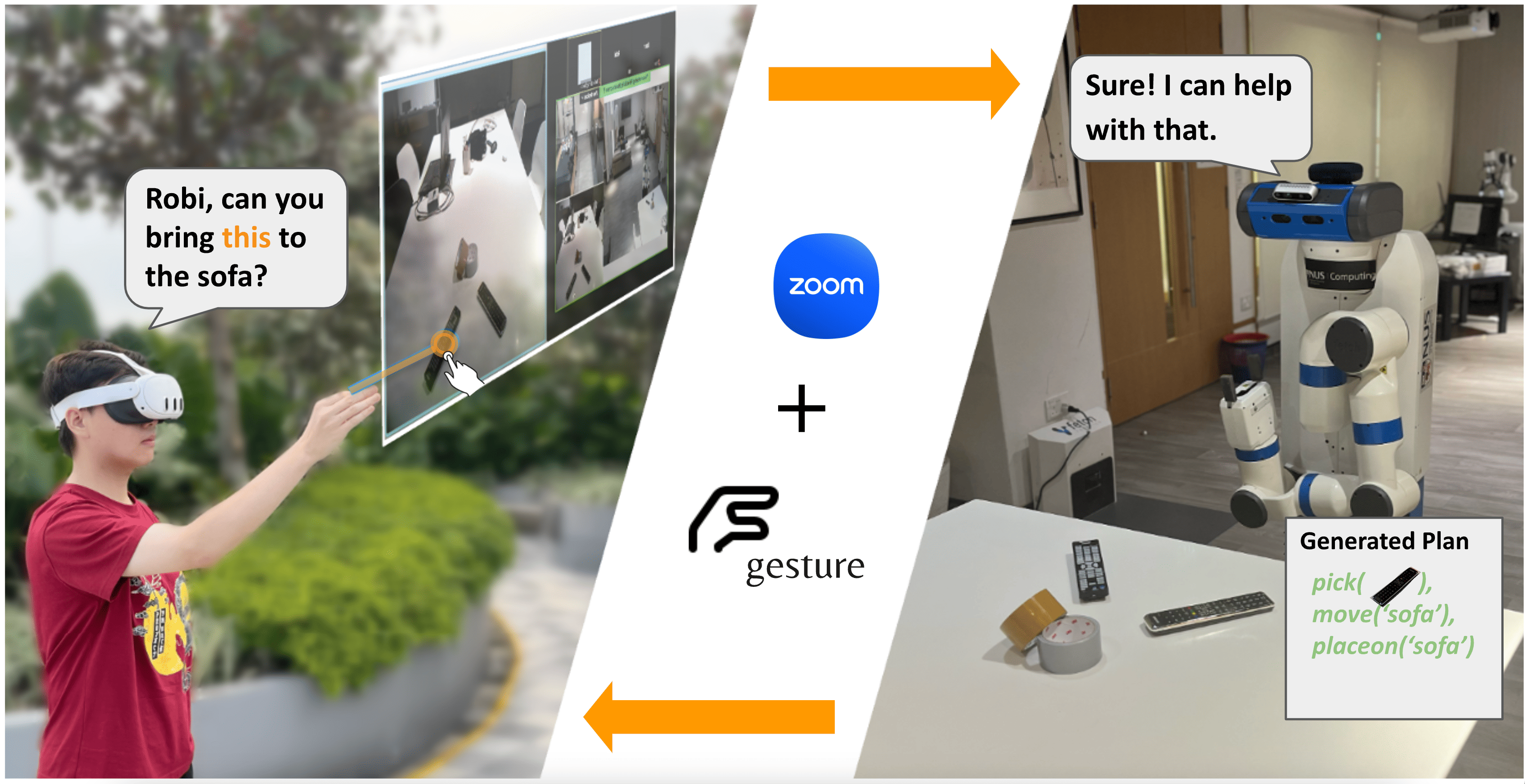

Abstract: Imagine a future in which we can Zoom-call a robot to manage household chores remotely. This work takes one step in that direction. RobiButler is a new household robot assistant that enables seamless multimodal remote interaction. It allows the human user to monitor the robot's environment from a first-person view, issue voice or text commands, and specify target objects through hand-pointing gestures. At its core, a high-level behavior module, powered by Large Language Models (LLMs), interprets multimodal instructions to generate multistep action plans. Each plan consists of open-vocabulary primitives supported by vision-language models, enabling the robot to process both textual and gestural inputs. Zoom provides a convenient interface for implementing remote interactions between the human and the robot. The integration of these components allows RobiButler to ground remote multimodal instructions in real-world home environments in a zero-shot manner. We evaluated the system on various household tasks, demonstrating its ability to execute complex user commands with multimodal inputs. We also conducted a user study to examine how multimodal interaction influences user experience in remote human-robot interaction. These results suggest that, with the advances in robot foundation models, we are moving closer to the reality of remote household robot assistants.
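
As a rough illustration of the idea that a plan is a sequence of primitives whose targets may come from text or from a pointing gesture, here is a minimal sketch. It is not RobiButler's implementation: the names `Target`, `Step`, `plan_pick_and_place`, and `describe`, the primitive names `pick` and `place`, and the pixel-coordinate representation of a gesture are all hypothetical, and a hard-coded function stands in for the LLM planner.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Target:
    """An object reference: a text description, a pointed pixel, or both (hypothetical)."""
    text: Optional[str] = None
    pointed_pixel: Optional[Tuple[int, int]] = None


@dataclass
class Step:
    """One plan step: a primitive name (hypothetical) applied to a target."""
    primitive: str
    target: Target


def plan_pick_and_place(object_text: str,
                        destination_text: str,
                        pointed_pixel: Optional[Tuple[int, int]] = None) -> List[Step]:
    """Stand-in for the LLM planner: always emits a two-step pick/place plan."""
    picked = Target(text=object_text, pointed_pixel=pointed_pixel)
    return [Step("pick", picked), Step("place", Target(text=destination_text))]


def describe(step: Step) -> str:
    """Render a step, preferring the gesture over the text when both are present."""
    t = step.target
    if t.pointed_pixel is not None:
        return f"{step.primitive}(object pointed at {t.pointed_pixel})"
    return f"{step.primitive}({t.text!r})"


plan = plan_pick_and_place("cup", "sink", pointed_pixel=(320, 240))
for step in plan:
    print(describe(step))
```

In this sketch the gesture takes precedence over the text description when both are available; that is an assumption made for illustration, not a documented design choice of the system.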

For more details, visit https://robibutler.github.io

Team

- Anxing Xiao
- Nuwan Janaka
- Tianrun Hu
- Anshul Gupta
- Kaixin Li
- Cunjun Yu
- David Hsu