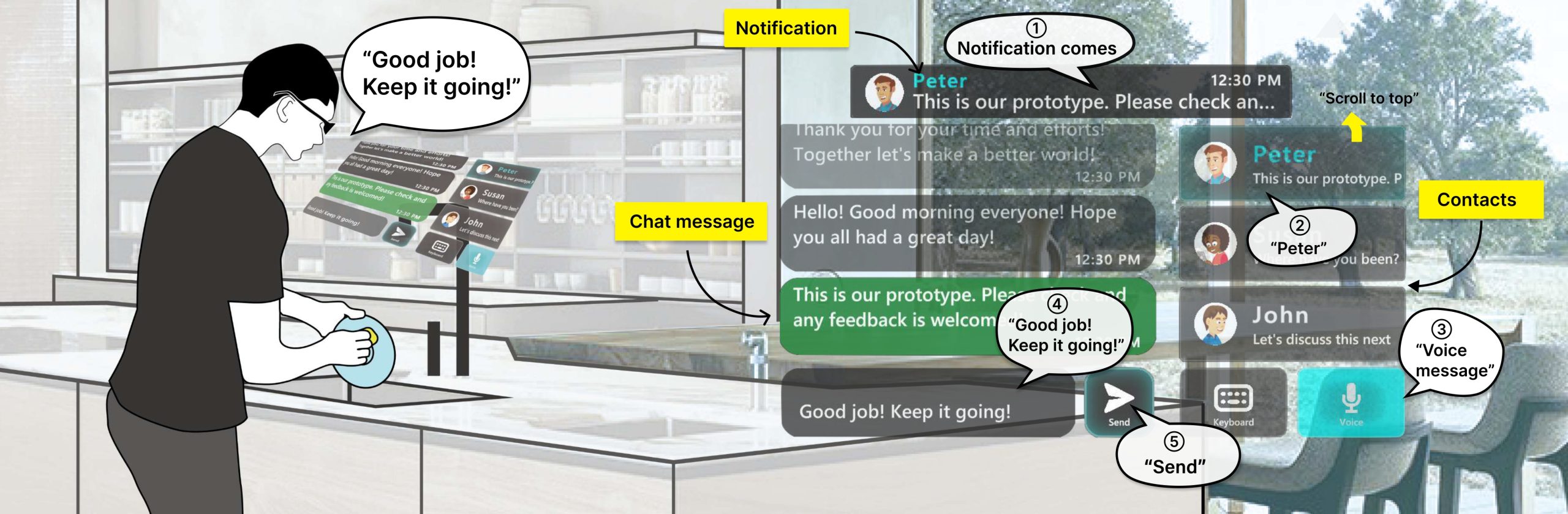

GlassMessaging: Towards Ubiquitous Messaging Using OHMDs

Abstract: Communicating with others while engaging in simple daily activities is both common and natural. However, because existing digital messaging applications occupy the hands and eyes, it is challenging to message someone while performing such activities. We present GlassMessaging, a messaging application for Optical See-Through Head-Mounted Displays (OHMDs) that supports messaging with voice and manual inputs in hands- and eyes-busy scenarios. GlassMessaging was developed iteratively, informed by a formative study that identified current messaging behaviors and challenges in common scenarios where people message while multitasking. We then evaluated the application against the mobile phone platform at varying texting complexities in eating and walking scenarios. Our results showed that, compared to phone-based messaging, GlassMessaging increased messaging opportunities during multitasking owing to its hands-free, wearable nature and multimodal input capabilities. The affordances of GlassMessaging also gave users easier access to voice input than the phone did, which reduced response time by 33.1% and increased texting speed by 40.3%, at a cost of 2.5% in texting accuracy, particularly as texting complexity increased. Lastly, we discuss trade-offs and insights to lay a foundation for future OHMD-based messaging applications.

For more details, visit https://github.com/NUS-HCILab/GlassMessaging

Team:

- Nuwan Janaka

- Jie Gao

- Lin Zhu

- Shengdong Zhao

- Lan Lyu

- Peisen Xu

- Maximilian Nabokow

- Silang Wang

- Yanch Ong